I was reviewing the Project Connect evaluation criteria when I noticed a bit of an oddity:

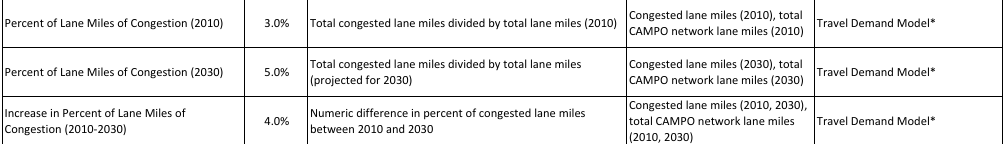

In scoring congested lane miles, 2010 congestion data counts for 3% of the total, 2030 congestion projections count for 5%, and the difference between the two counts for 4%. Weighting the difference between the projections and the real-world data more heavily than the real-world data itself has two consequences. First, the 2030 projections count for more than the 2010 data. Second, given two subcorridors with the same 2030 projections, the one with less congestion in 2010 is scored as worse.
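Writing the score out makes the second point explicit. As a sketch, assume the congested-lane-miles score is simply the weighted sum of the three inputs, with each percentage multiplied by its weight (3% for 2010, 4% for the increase, 5% for 2030) and by 100. Let $b$ be the 2010 congestion percentage and $f$ the 2030 projection (shorthand introduced here, not Project Connect's notation). Because the increase is just $f - b$, the three terms collapse:

$$
\text{score} = 3b + 4(f - b) + 5f = 9f - b
$$

The 2010 figure ends up with a negative coefficient. Holding the 2030 projection fixed, each extra point of congestion today lowers the score by one point. A subcorridor at 20% in both years scores $9(20) - 20 = 160$, while one that rises from 10% to 20% scores $9(20) - 10 = 170$.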

Note: The 2010 and 2030 columns are percentages. Each Weighted column is the column before it multiplied by that metric's weight (its percentage of the total score) and then by 100 for readability. For example, a 2010 value of 3 becomes 3 × 0.03 × 100 = 9.

| Subcorridor | 2010 (%) | 2010 weighted (×3) | Increase | Increase weighted (×4) | 2030 (%) | 2030 weighted (×5) | Total |
|---|---|---|---|---|---|---|---|
| A | 3 | 9 | 17 | 68 | 20 | 100 | 177 |
| B | 17 | 51 | 3 | 12 | 20 | 100 | 163 |
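Below is a minimal Python sketch of the scoring described in the note. It assumes the total is a plain sum of the three weighted columns. The function and variable names are illustrative and are not anything Project Connect publishes. With 2030 weighted at 5% as stated above, the first example works out to A = 177 and B = 163.

```python
# Weights from the evaluation criteria, as whole-number percentages of the total.
WEIGHTS = {"2010": 3, "increase": 4, "2030": 5}


def weighted_score(pct_2010, pct_2030, weights=WEIGHTS):
    """Return each weighted column and the total for one subcorridor.

    Multiplying a percentage by its weight (e.g. 3% -> 0.03) and then by 100
    is the same as multiplying by the whole-number weight used here.
    """
    increase = pct_2030 - pct_2010  # change between 2010 data and 2030 projection
    cols = {
        "2010": pct_2010 * weights["2010"],
        "increase": increase * weights["increase"],
        "2030": pct_2030 * weights["2030"],
    }
    cols["total"] = sum(cols.values())  # hypothetical: plain sum of the columns
    return cols


example_1 = {"A": (3, 20), "B": (17, 20)}
for name, (b, f) in example_1.items():
    print(name, weighted_score(b, f))
```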

To restate the first example: Subcorridors A and B are tied on the 2030 metric, and B was measured as more congested in 2010. In total, however, A is scored as more congested. You can even construct examples where A is more congested than B in both 2010 and 2030, but B is scored as more congested overall:

| Subcorridor | 2010 (%) | 2010 weighted (×3) | Increase | Increase weighted (×4) | 2030 (%) | 2030 weighted (×5) | Total |
|---|---|---|---|---|---|---|---|
| A | 26 | 78 | 5 | 20 | 31 | 155 | 253 |
| B | 5 | 15 | 25 | 100 | 30 | 150 | 265 |

In this example, Subcorridor A is more congested in both 2010 and 2030. The Project Connect evaluation criteria still score Subcorridor B as more congested overall, because it shows a large increase. I could construct a rationale for this: perhaps the increase between 2010 and 2030 represents a trend that will continue beyond 2030. That would not be a recommended use of the model, though. There are reasons we don’t use simple linear extrapolation in the model in the first place. This odd situation could be avoided by simply dropping the increase as a metric.
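As a quick check that dropping the increase metric fixes the ranking, the sketch below rescores the second example with the increase weight set to zero. It assumes the remaining 3% and 5% weights are left as they are rather than renormalized. Renormalizing would scale both totals by the same factor, so the order would not change. The function name `total` is illustrative.

```python
def total(pct_2010, pct_2030, w_2010=3, w_increase=4, w_2030=5):
    """Weighted congestion score; weights are whole-number percentages."""
    return (w_2010 * pct_2010
            + w_increase * (pct_2030 - pct_2010)
            + w_2030 * pct_2030)


example_2 = {"A": (26, 31), "B": (5, 30)}

# Current criteria: B scores higher (more congested) despite lower congestion in both years.
with_increase = {n: total(b, f) for n, (b, f) in example_2.items()}
# Increase metric removed: A scores higher, matching the 2010 data and 2030 projections.
without_increase = {n: total(b, f, w_increase=0) for n, (b, f) in example_2.items()}

print(with_increase)     # {'A': 253, 'B': 265}
print(without_increase)  # {'A': 233, 'B': 165}
```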

What effect did this have on the overall results? I’m not sure. The individual scores for each subcorridor have not yet been released, so I can’t predict how they would have been affected. I will be speaking with Project Connect soon and hope to hear their rationale.

Update: The original Example 2 was messed up. This version is fixed (I hope!).

Update 2: Improved readability by expressing values as percentages rather than decimals.

Update 3: For a little more discussion of this, you could break the 2030 projections into two components, Base (2010) + Increase. You would then split whatever weight a scheme puts on the 2030 projections evenly between the two.

If you consider just 2010 data (as FTA suggests), you are going 100% for Base, 0% for Increase.

If you consider half 2010 data and half 2030 projections (as FTA allows), you are going 75% for Base and 25% for Increase.

If you consider just 2030 projections, you are going 50% for Base and 50% for Increase.

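With Project Connect’s weights, Base gets the 3% for 2010 plus half of the 5% for 2030. Increase gets the other half of the 5% plus the 4% for the increase. That is 5.5 versus 6.5 out of 12, or roughly 46% Base and 54% Increase. The result puts even more weight on the increase than if you had used the 2030 projections alone.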
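The sketch below reproduces these percentages. It assumes the framing above: 2030 = Base + Increase, with the weight on the 2030 projection split evenly between the two components. `base_increase_split` is a hypothetical helper name. It uses exact fractions so the shares are not affected by rounding.

```python
from fractions import Fraction


def base_increase_split(w_2010, w_2030, w_increase=0):
    """Return (Base share, Increase share) of the total weight.

    The 2030 weight is split evenly between Base and Increase, following
    the Base + Increase decomposition described above.
    """
    base = Fraction(w_2010) + Fraction(w_2030) / 2
    increase = Fraction(w_2030) / 2 + Fraction(w_increase)
    whole = base + increase
    return base / whole, increase / whole


schemes = {
    "2010 only (FTA suggests)": (1, 0),
    "half 2010, half 2030 (FTA allows)": (1, 1),
    "2030 only": (0, 1),
    "Project Connect (3/5/4)": (3, 5, 4),
}
for name, weights in schemes.items():
    base, inc = base_increase_split(*weights)
    print(f"{name}: Base {float(base):.1%}, Increase {float(inc):.1%}")
```

For Project Connect’s weights this prints Base 45.8%, Increase 54.2%, which rounds to the 46%/54% above.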

The exact same issue applies to the “Growth Index”. There, a 50%-50% weighting between Increase and Future yields a 25% weighting for Base and a 75% weighting for Increase. That is the reverse of the 75%-25% split you get from using half 2010 data and half 2030 projections, which the FTA allows.
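Under the same assumption that weight on a future-year figure is split evenly between Base and Increase, the Growth Index shares work out as follows. The Increase term keeps its full 0.5, and the 0.5 on Future is divided between the two components:

$$
w_{\text{Base}} = \tfrac{1}{2}(0.5) = 0.25, \qquad w_{\text{Increase}} = 0.5 + \tfrac{1}{2}(0.5) = 0.75
$$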